Real-Time Transcription and Subtitles

Project information

- Category: Application

- Project date: April 2024

- Languages/Libraries: Python (OpenAI, OpenCV, Sockets, Threading)

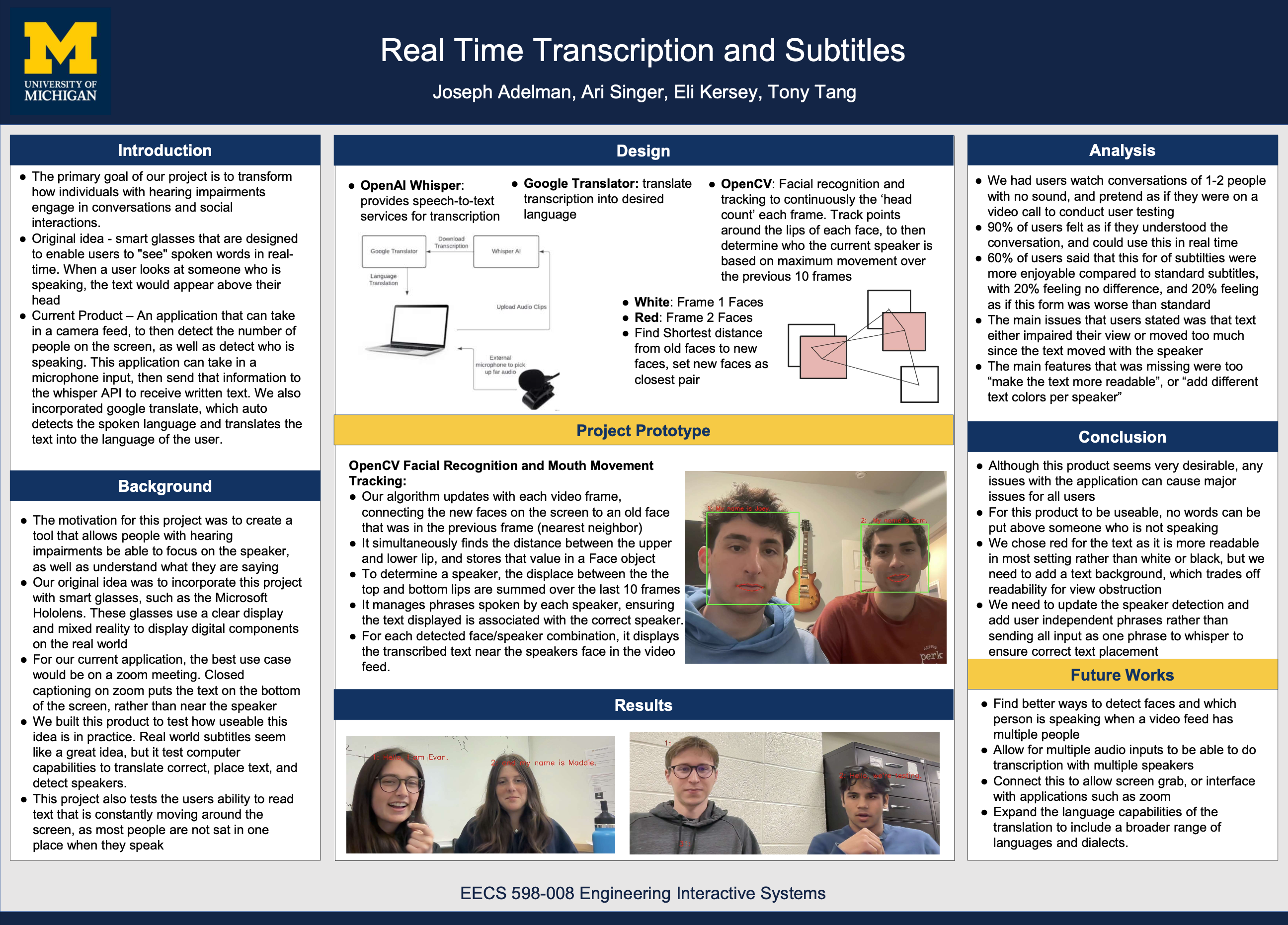

Summary: An application designed to improve communication accessibility, particularly for people with hearing impairments. At its core is a real-time transcription and subtitle system that detects and transcribes spoken words during live conversations.

The system uses OpenAI's Whisper API for accurate speech-to-text transcription. To pair the transcribed text with the correct speaker, we integrated OpenCV for facial recognition and movement tracking. This not only identifies who is speaking but also tracks each speaker's mouth movements, so the transcriptions stay synchronized with whoever is talking.
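The speaker-pairing step can be sketched as follows. This is a minimal, dependency-free illustration, not the project's actual code: it assumes faces have already been located (for example with an OpenCV face detector) as `(x, y, w, h)` boxes, approximates each mouth as the lower third of its face box, and treats frames as grayscale pixel grids. The face whose mouth region changes the most between consecutive frames, above a hypothetical `threshold`, is taken as the active speaker, and the current Whisper transcript segment would then be attached to that face. The helper names `mouth_region`, `mouth_motion`, and `active_speaker` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Frame = List[List[int]]          # grayscale frame: rows of 0-255 pixel values
Box = Tuple[int, int, int, int]  # (x, y, w, h) face bounding box


def mouth_region(face: Box) -> Box:
    """Approximate the mouth as the lower third of the face box (an assumption)."""
    x, y, w, h = face
    top = y + 2 * h // 3
    return (x, top, w, y + h - top)


def mouth_motion(prev: Frame, curr: Frame, face: Box) -> float:
    """Mean absolute pixel change inside the mouth region between two frames."""
    x, y, w, h = mouth_region(face)
    total = 0
    count = 0
    for row in range(y, y + h):
        for col in range(x, x + w):
            total += abs(curr[row][col] - prev[row][col])
            count += 1
    return total / count if count else 0.0


def active_speaker(
    prev: Frame,
    curr: Frame,
    faces: Dict[str, Box],
    threshold: float = 5.0,  # hypothetical, illustrative value; would need tuning
) -> Optional[str]:
    """Return the face whose mouth moved most, or None if nobody moved enough."""
    scores = {name: mouth_motion(prev, curr, box) for name, box in faces.items()}
    if not scores:
        return None
    best = max(scores, key=lambda name: scores[name])
    return best if scores[best] >= threshold else None
```

With OpenCV, frames are NumPy arrays, so the nested loops in `mouth_motion` could be replaced by `cv2.absdiff` on the cropped mouth slices followed by a mean; plain lists are used here only to keep the sketch self-contained.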

In developing this tool, we focused on a seamless user experience: the transcribed text is displayed directly above the speaker's face in the video feed, so viewers can follow the conversation without any additional hardware.
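Placing a caption above a face reduces to a small geometry problem. The sketch below is an illustration under stated assumptions rather than the project's implementation: it assumes the face box comes from the OpenCV tracking step and that the caption's pixel size is already known (in OpenCV, `cv2.getTextSize` returns it). It returns the bottom-left origin that `cv2.putText` expects, centering the caption over the face and clamping it so it stays inside the frame. `subtitle_origin` and its `margin` parameter are hypothetical.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x, y, w, h) face bounding box


def subtitle_origin(
    face: Box,
    text_size: Tuple[int, int],   # (width, height) of the rendered caption in pixels
    frame_size: Tuple[int, int],  # (width, height) of the video frame
    margin: int = 10,             # hypothetical gap between caption and face
) -> Tuple[int, int]:
    """Return the bottom-left origin for a caption centered above a face."""
    x, y, w, _ = face
    text_w, text_h = text_size
    frame_w, frame_h = frame_size

    # Center the caption horizontally over the face box.
    left = x + (w - text_w) // 2
    # Keep the caption from running off the right edge, then off the left edge.
    left = max(0, min(left, frame_w - text_w))

    # Put the caption's baseline `margin` pixels above the top of the face.
    baseline = y - margin
    # If there is no room above the face, push the caption down so its top
    # edge stays at or below the top of the frame.
    baseline = max(text_h, min(baseline, frame_h))
    return left, baseline
```

For a 640x480 frame, a face at `(100, 200, 80, 80)`, and a 60x20 caption, this yields an origin of `(110, 190)`. Clamping rather than hiding the caption keeps subtitles visible when a speaker moves toward the edge of the frame, at the cost of occasionally overlapping the face.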

Throughout development, we refined the prototype based on user feedback, focusing on improving readability and minimizing visual obstruction. This work lays the groundwork for future enhancements, including more sophisticated speaker detection, expanded language support, and integration with meeting platforms such as Zoom.